As always, with a built-in benchmark, one of the most important things to look at is the accuracy of that benchmark as it pertains to the “real” game. Being inconsistent with in-game performance doesn’t necessarily invalidate a benchmark’s usefulness, though, it’s just that the light in which that benchmark is viewed must be kept in mind. Without accuracy to in-game performance, the benchmark tools mostly become synthetic benchmarks: They’re good for relative performance measurements between cards, but not necessarily absolute performance. That’s completely fine, too, as that’s mostly what we look for in reviews. The only (really) important thing is that performance scaling is consistent between cards in both pre-built benchmarks and in-game benchmarks.

Test settings for the game are as follows: Resolution as defined on chart.

One of the most important things to look at with a major game launch is whether its built-in benchmark is actually reliable. With Final Fantasy, we discovered that the game was improperly culling some objects to the extent that they affected performance from miles away. With other games, like Civilization VI, we have found that the turn time benchmarks are accurate to actual gameplay.

For Metro: Exodus, we’ll start by checking actual in-game performance versus the benchmark scene. We’re starting with only the 2080 Ti at 4K resolution, scaling across low to extreme settings and with varying RTX configurations. We found that the built-in benchmark operated at only 84FPS AVG with the 2080 Ti at 4K and low settings, with RTX off, whereas our in-game testing posted 152FPS AVG performance results. As always, this is tested multiple times and averaged for parity. At medium settings and with RTX off, we found performance decay to 72FPS AVG for the built-in test and 118FPS AVG for actual gameplay. This further drops to 60FPS AVG for High settings with the built-in benchmark and 94FPS AVG for the in-game test.

For the next data point, we have to highlight something important: With Ultra settings and RTX off, we’re still running at 87FPS AVG, which is technically better than the built-in benchmark’s 84FPS AVG starting point at Low settings. If ever there were any question as to whether the built-in benchmark is an accurate depiction of gameplay, this answers it by three entire drops in graphics quality.

For Extreme settings, we hit 70FPS AVG without any RTX enabled and 40FPS with all graphics settings maxed. As we descend the chart, we eventually see that the 2080 Ti hits 53FPS AVG with RTX on High and graphics set to Ultra with in-game performance, versus 36FPS for the built-in test. We then hit 48FPS AVG with RTX Ultra, a drop of about 9% from High.

Now, as for what this means, it’s pretty straight-forward: As long as the card-to-card scaling remains equally spaced in the built-in test as it is in the game, e.g. an RTX 2080 Ti retains X% lead over Radeon VII in both tests, where X doesn’t change, then it is acceptable as a synthetic gauge of performance.
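To make the arithmetic behind these comparisons concrete, here is a minimal Python sketch. It uses only the 2080 Ti 4K averages quoted in this article. The function names and the two-percentage-point tolerance are illustrative assumptions, not part of our test methodology.

```python
# FPS averages quoted in this article for the RTX 2080 Ti at 4K, RTX off.
# Each entry is (built-in benchmark, in-game test).
RTX_OFF_4K = {
    "Low": (84, 152),
    "Medium": (72, 118),
    "High": (60, 94),
}


def percent_drop(before, after):
    """Percentage decrease going from `before` to `after`."""
    return (before - after) / before * 100.0


def relative_lead(fps_a, fps_b):
    """Percentage by which result A leads result B."""
    return (fps_a / fps_b - 1.0) * 100.0


def scaling_is_consistent(lead_builtin, lead_ingame, tolerance_pts=2.0):
    """True if a card-to-card lead differs by at most `tolerance_pts`
    percentage points between the built-in and in-game tests.
    The tolerance is a hypothetical value chosen for illustration."""
    return abs(lead_builtin - lead_ingame) <= tolerance_pts


if __name__ == "__main__":
    # How far the in-game result sits above the built-in benchmark.
    for setting, (builtin, ingame) in RTX_OFF_4K.items():
        gap = relative_lead(ingame, builtin)
        print(f"{setting}: in-game is {gap:.0f}% faster than the built-in run")

    # In-game RTX High (53FPS AVG) to RTX Ultra (48FPS AVG).
    print(f"RTX High -> RTX Ultra: {percent_drop(53, 48):.1f}% drop")
```

To check card-to-card scaling, compute `relative_lead` for the same pair of cards in each test and pass both leads to `scaling_is_consistent`. If the lead holds, the built-in benchmark remains usable as a relative measure even though its absolute numbers are far lower than gameplay.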

#WHERE IS THE METRO LAST LIGHT BENCHMARK SERIES#

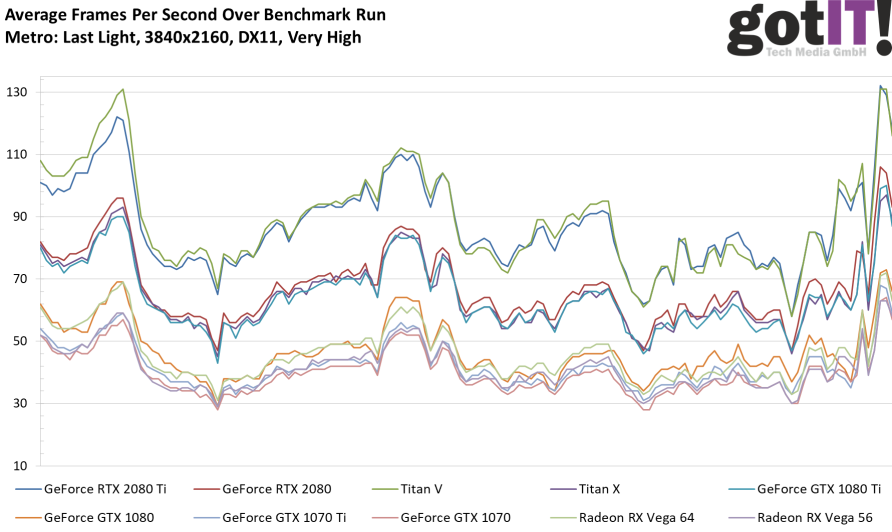

Metro: Exodus is the next title to include NVIDIA RTX technology, leveraging Microsoft’s DXR. We already looked at the RTX implementation from a qualitative standpoint (in video), talking about the pros and cons of global illumination via RTX, and now we’re back to benchmark the performance from a quantitative standpoint. The Metro series has long been used as a benchmarking standard.
